Parallel Reality and Future Implications

You’ve heard of extended reality (XR) technologies like virtual reality (VR), mixed reality (MR), and augmented reality (AR), but have you heard of parallel reality?

Don’t be dismayed if you haven’t heard of this very new technology, but get ready to hear the term “parallel reality” more and more: Delta Air Lines just deployed a test of the technology at an airport terminal.

In short, travelers can “agree” to be tracked by the technology at check-in. As they go through TSA, a large screen in the terminal greets them and shows their flight status, gate number, and so on. At first glance, none of this seems especially innovative, but here’s the kicker…

That same screen can show 100 different, discrete images to 100 different travelers simultaneously. One hundred people looking at the same screen each see a completely different image.

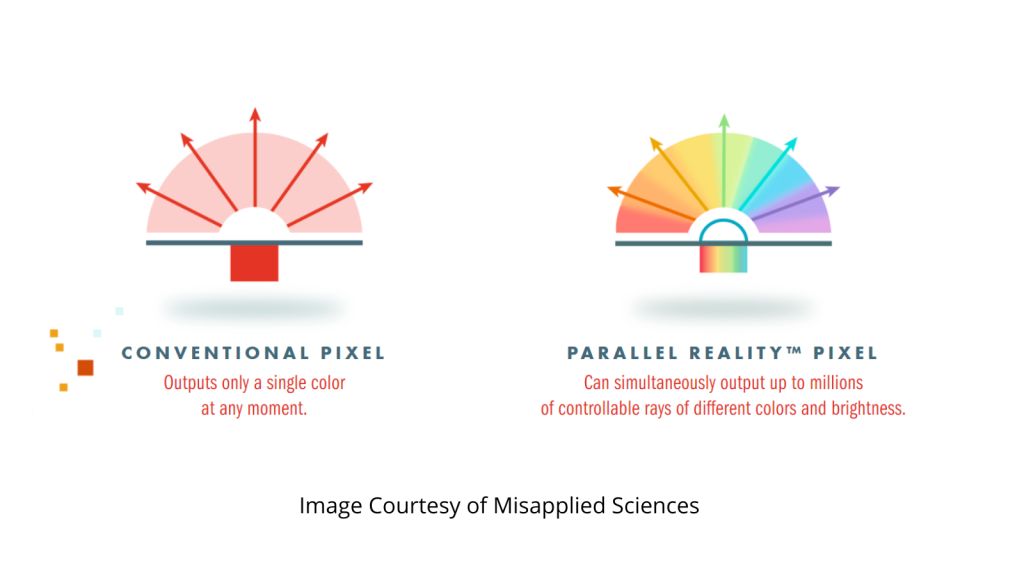

This is what Misapplied Sciences, the firm that developed this technology, calls parallel reality. But how does it actually work without glasses or a personal device? Well, the short answer is that it uses a different kind of pixel.

A standard pixel on a display can show only one color at a time, and it shows that color in every direction across the screen’s viewing angle. Parallel reality pixels, on the other hand, can emit multiple colors from the same pixel and direct each color toward a specific location.
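To make the pixel difference concrete, here is a toy Python model. It assumes, purely for illustration, that a pixel’s viewing angle is split into equal angular “bins” that can each carry their own color; the class and method names (`MultiViewPixel`, `aim`, `color_seen_from`) are hypothetical and are not how Misapplied Sciences actually builds or drives its pixels. In this model, a standard pixel is simply the one-bin case.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]
BLACK: RGB = (0, 0, 0)


@dataclass
class MultiViewPixel:
    """Toy model of a single pixel that can send a different color in each direction.

    Simplifying assumption (not Misapplied Sciences' actual design): the pixel's
    horizontal viewing angle is split into `num_views` equal angular bins, and each
    bin carries its own color. A standard pixel is the special case num_views == 1.
    """
    fov_deg: float    # total horizontal viewing angle, centered on straight-ahead (0 degrees)
    num_views: int    # number of independently addressable directions
    colors: List[RGB] = field(init=False)

    def __post_init__(self) -> None:
        self.colors = [BLACK] * self.num_views  # every direction starts out dark

    def bin_for_angle(self, angle_deg: float) -> Optional[int]:
        """Map a viewing angle to a bin index, or None if it is outside the viewing angle."""
        half = self.fov_deg / 2
        if not -half <= angle_deg <= half:
            return None
        width = self.fov_deg / self.num_views
        # min() keeps a viewer at exactly +half inside the last bin.
        return min(int((angle_deg + half) // width), self.num_views - 1)

    def aim(self, angle_deg: float, rgb: RGB) -> None:
        """Send `rgb` in the direction of `angle_deg` (and the rest of its bin)."""
        idx = self.bin_for_angle(angle_deg)
        if idx is None:
            raise ValueError(f"{angle_deg} degrees is outside the viewing angle")
        self.colors[idx] = rgb

    def color_seen_from(self, angle_deg: float) -> Optional[RGB]:
        """The color a viewer at `angle_deg` sees, or None if the pixel isn't visible."""
        idx = self.bin_for_angle(angle_deg)
        return None if idx is None else self.colors[idx]


# Standard pixel: one color, identical from every angle.
standard = MultiViewPixel(fov_deg=120, num_views=1)
standard.aim(0, (255, 0, 0))

# Multi-view pixel: red to one side, blue to the other, dark in between.
multi = MultiViewPixel(fov_deg=120, num_views=100)
multi.aim(-30, (255, 0, 0))
multi.aim(30, (0, 0, 255))

for angle in (-30, 0, 30):
    print(angle, standard.color_seen_from(angle), multi.color_seen_from(angle))
```

The 120-degree viewing angle and 100 bins are arbitrary example values, chosen only to echo the “100 travelers” figure above.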

The graphic below from Misapplied Sciences may help you visualize what is happening.

The technology then uses software and sensors, such as facial recognition cameras, to track each person and make sure the display aims the right “image” in their direction.
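Tracking matters because each pixel sees a given person from a slightly different angle. The sketch below assumes a tracker that reports each traveler’s position in meters and reuses the equal-bin simplification; the function names, positions, and flight messages are all hypothetical. It also illustrates one plausible limitation of any scheme like this: two people standing in nearly the same direction from a pixel can fall into the same bin, so only one of them gets their own message from that pixel.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical tracker output: viewer id -> (x along the screen, distance in front of it), in meters.
Position = Tuple[float, float]


def angle_to_viewer(pixel_x: float, viewer: Position) -> float:
    """Horizontal angle in degrees from a pixel on the screen plane to a viewer.

    0 means the viewer is straight in front of that pixel; positive is to its right.
    """
    viewer_x, distance = viewer
    return math.degrees(math.atan2(viewer_x - pixel_x, distance))


def angle_to_bin(angle_deg: float, fov_deg: float, num_views: int) -> int:
    """Quantize an in-range angle into one of num_views equal angular bins."""
    width = fov_deg / num_views
    return min(int((angle_deg + fov_deg / 2) // width), num_views - 1)


def plan_pixel(pixel_x: float, viewers: Dict[str, Position], content: Dict[str, str],
               fov_deg: float = 120.0, num_views: int = 100):
    """Decide which content each angular bin of one pixel should carry.

    Returns (bin -> content, viewers that couldn't be served because another viewer
    with different content already occupies the same bin).
    """
    plan: Dict[int, str] = {}
    conflicts: List[str] = []
    for viewer_id, position in viewers.items():
        angle = angle_to_viewer(pixel_x, position)
        if abs(angle) > fov_deg / 2:
            continue  # this pixel isn't visible from where the viewer stands
        b = angle_to_bin(angle, fov_deg, num_views)
        if b in plan and plan[b] != content[viewer_id]:
            conflicts.append(viewer_id)  # two people in one direction: only one message fits
            continue
        plan[b] = content[viewer_id]
    return plan, conflicts


viewers = {"alice": (-2.0, 5.0), "bob": (3.0, 5.0), "carol": (3.02, 5.0)}
content = {"alice": "DL123 Gate B5", "bob": "DL456 Gate A2", "carol": "DL789 Gate C1"}

# Each pixel sees the same person at a different angle, so aiming is computed per pixel.
for pixel_x in (-2.0, 0.0, 2.0):
    plan, conflicts = plan_pixel(pixel_x, viewers, content)
    print(pixel_x, plan, conflicts)
```

Here “carol” stands only 2 cm from “bob”, so at this made-up angular resolution she lands in his bin and is reported as a conflict.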

Being able to deliver several discrete images to different people within one venue obviously has tons of applications: customized content, captions in multiple languages, text sized for each viewer’s visual acuity and distance, and more. Misapplied Sciences can help develop applications in all of these areas, and its team doesn’t just make the displays; it can also help with content creation, data management, and other services.
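As one example of per-viewer customization, text can be scaled so a message keeps the same apparent size for each traveler. The sketch assumes a legibility rule based on the Snellen chart (a 20/20 letter subtends 5 arcminutes, and a 20/40 viewer needs letters twice as large) plus a hypothetical `comfort_factor` margin; the message strings and function names are illustrative only, not a Misapplied Sciences API.

```python
import math

SNELLEN_20_20_ARCMIN = 5.0  # a 20/20 Snellen letter subtends 5 arcminutes


def text_height_m(distance_m: float, snellen_denominator: float = 20.0,
                  comfort_factor: float = 4.0) -> float:
    """Letter height (meters) that keeps text at a constant angular size for one viewer.

    comfort_factor is a hypothetical margin so text is easy to read, not just at the acuity limit.
    """
    arcmin = SNELLEN_20_20_ARCMIN * (snellen_denominator / 20.0) * comfort_factor
    theta = math.radians(arcmin / 60.0)
    return 2.0 * distance_m * math.tan(theta / 2.0)


# Hypothetical per-language strings for the same gate message.
MESSAGES = {"en": "Gate B5", "es": "Puerta B5", "fr": "Porte B5"}


def personalize(language: str, distance_m: float, snellen_denominator: float = 20.0):
    """Pick the message text and letter height for one tracked viewer."""
    text = MESSAGES.get(language, MESSAGES["en"])  # fall back to English
    return text, text_height_m(distance_m, snellen_denominator)


for viewer in [("en", 5.0, 20.0), ("es", 15.0, 20.0), ("fr", 5.0, 40.0)]:
    text, height = personalize(*viewer)
    print(f"{text!r}: {height * 100:.1f} cm tall")
```

Because the angular size is fixed, the required letter height grows linearly with distance: a traveler three times farther away gets text three times taller, without changing what anyone else sees.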

But do the implications of technology like this extend beyond parallel reality?

Flashback to InfoComm 2012… I took a course on 3D visualization technologies given by a company named Holografika. I also mentioned them in a few blog posts for rAVe [PUBS], including this one from 2014.

Holografika was pioneering glasses-free 3D screens a dozen years ago. Now, when I say glasses-free, I don’t mean a basic two-view autostereoscopic display (flat left-eye and right-eye images, slightly offset, to create the illusion of depth). I mean real 3D: if there were a car on the screen and you walked to the left, you’d see the hood; if you stood in the middle, you’d see the side; and if you walked toward the back, you’d see the trunk.

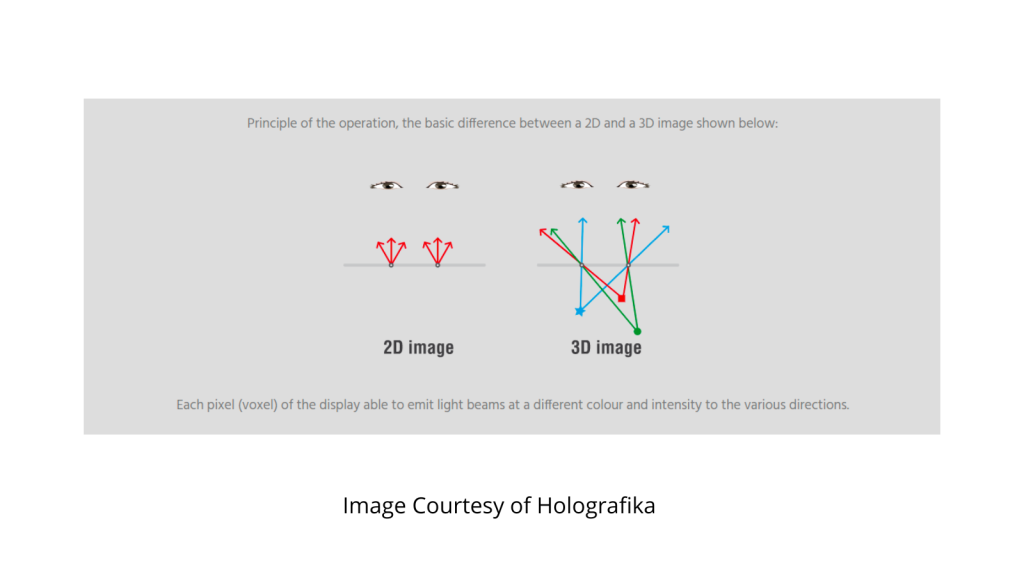

This wasn’t head-tracking technology, where the computer tracks a single user’s head and adjusts the image for that user’s point of view. This screen was simultaneously outputting hundreds of images at different angles through each pixel. Sound familiar?

Holografika’s tech may be slightly different (I’m not sure, as I haven’t seen the technical details of the new parallel reality displays), but the general principle appears to be the same. Holografika called their pixels voxels. Take a look at the tech diagram from their site as well.

It looks familiar too, right?

So the same displays that use parallel reality to deliver 100 different messages to 100 different people could also present 100 different angles of a single scene, creating real parallax and the illusion of depth for 3D models, R&D, and so on.
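The parallax idea can be sketched the same way. Assuming the screen’s viewing angle is divided into equal bins and each bin gets its own rendered view, the code below works out where a single 3D point must be drawn on the screen for several of those views. The function names are hypothetical, and the sketch simplifies by measuring every view from the screen center rather than from each individual pixel, as a real light field renderer would have to.

```python
import math
from typing import List


def view_angles(fov_deg: float, num_views: int) -> List[float]:
    """Center angle (degrees) of each of num_views equal angular bins."""
    width = fov_deg / num_views
    return [-fov_deg / 2 + (i + 0.5) * width for i in range(num_views)]


def projected_x(point_x: float, point_depth: float, view_angle_deg: float,
                viewing_distance: float) -> float:
    """Where a 3D point must be drawn on the screen plane (z = 0) for one view.

    The virtual eye for this view sits viewing_distance from the screen center at
    view_angle_deg; the point sits point_depth behind the screen (0 = on its surface).
    """
    a = math.radians(view_angle_deg)
    eye_x = viewing_distance * math.sin(a)
    eye_z = viewing_distance * math.cos(a)   # eye is in front of the screen (z > 0)
    t = eye_z / (eye_z + point_depth)        # fraction of the eye-to-point ray where z = 0
    return eye_x + (point_x - eye_x) * t


angles = view_angles(fov_deg=120, num_views=100)
for depth in (0.0, 0.5, 1.0):
    # Left-most, center, and right-most views of a point straight behind the screen center.
    picks = (angles[0], angles[50], angles[-1])
    xs = [projected_x(0.0, depth, a, viewing_distance=3.0) for a in picks]
    print(depth, [round(x, 3) for x in xs])
```

A point on the screen surface (depth 0) lands in the same place in every view, while points behind it shift with the viewer, and shift more the deeper they are; that difference between views is the parallax that reads as depth.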

We’ve also seen a lot of LED signage applications where the screens use forced perspective to create 3D-style effects. These displays have a “sweet spot” where the effect looks as intended; if an observer watches the screen from another angle, however, the effect breaks down. With parallel reality pixels, the screen could present a different angle of the image to each viewing position, eliminating the sweet spot and widening the area from which the 3D-style effect can be viewed.

But could there be even more?!?!

One of the most exciting opportunities in AV is the extended reality studio. Popularized by the production of The Mandalorian, these systems use large-scale LED backdrops to display backgrounds and sets rendered in real-time 3D engines like Unreal Engine and Unity, giving actors, actresses, and even marketing executives and presenters realistic backgrounds without green screen technology. In an XR studio (or virtual production studio), a camera tracking system also follows the camera’s position and adjusts the digital background for that point of view to create realistic depth and parallax.

In this workflow, however, a production team typically can’t film from multiple camera angles at once, because the LED backdrop uses traditional pixels that can present only one background at a time, rendered for the camera being tracked. With parallel reality pixels, the backdrop could present multiple views of the background simultaneously. Camera tracking wouldn’t be necessary, and multiple cameras could shoot from different angles at once while each keeps the proper perspective relative to the video backdrop.
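Even without live tracking, camera placement would still be limited by how finely each pixel can split its output. Under the same equal-bin assumption (120 degrees divided into 100 bins, both hypothetical numbers), the sketch below checks a row of wall positions and reports anywhere two cameras land in the same bin and would therefore receive the same background view. The function name, camera names, and positions are made up for illustration.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical camera positions: name -> (x along the wall, distance from the wall), meters.
Cameras = Dict[str, Tuple[float, float]]


def shared_bins(wall_xs: List[float], cameras: Cameras,
                fov_deg: float = 120.0, num_views: int = 100) -> List[Tuple[float, str, str]]:
    """List (wall_x, camera_a, camera_b) wherever two cameras fall in the same angular bin.

    Assumes each wall pixel splits fov_deg into num_views equal bins; two cameras in one
    bin would receive the same background view from that part of the wall.
    """
    width = fov_deg / num_views
    clashes = []
    for wall_x in wall_xs:
        owner: Dict[int, str] = {}
        for name, (cam_x, cam_dist) in cameras.items():
            angle = math.degrees(math.atan2(cam_x - wall_x, cam_dist))
            if abs(angle) > fov_deg / 2:
                continue  # this camera is outside this part of the wall's viewing angle
            b = min(int((angle + fov_deg / 2) // width), num_views - 1)
            if b in owner:
                clashes.append((wall_x, owner[b], name))
            else:
                owner[b] = name
    return clashes


wall = [i * 0.5 - 5.0 for i in range(21)]   # a 10 m wall sampled every 0.5 m
rig = {"A": (-1.0, 4.0), "B": (1.5, 4.0)}   # 2.5 m apart, 4 m from the wall
print(shared_bins(wall, rig))
print(len(shared_bins(wall, {**rig, "C": (-0.95, 4.0)})))  # C sits 5 cm from A
```

In this example the two cameras 2.5 m apart never share a bin, while a third camera placed 5 cm from camera A collides with it.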

With any new opportunity come new challenges.

First, the parallel reality display doesn’t seem especially bright, at least in Delta’s video, though that may simply be because it doesn’t capture well on camera.

Second, from the distribution side, this type of technology will require much higher data rates in AV transport, or hundreds of connections. Think about the bandwidth challenge of going from 1080p to 8K: 8K (7680 × 4320) has 16 times as many pixels as 1080p (1920 × 1080), so at the same frame rate and color depth it requires 16 times the bandwidth. Now think about sending 100 different views to a screen simultaneously. That would be 100 × 1080p, more than six times the pixel rate of a single 8K stream. There are ways to reduce the bandwidth with lower frame rates or reduced color depth and chroma subsampling, but those would obviously limit some of the potential applications above.
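The arithmetic behind those comparisons is easy to check. The sketch below computes uncompressed active-video data rates only, so it ignores blanking intervals, audio, and link overhead, and it assumes 60 fps and 8-bit color as example values; real AV transport may also use compression, so treat the figures as relative scale rather than link requirements. The function name `raw_gbps` is hypothetical.

```python
def raw_gbps(width: int, height: int, fps: float, bits_per_pixel: float) -> float:
    """Uncompressed active-video data rate in Gbit/s (ignores blanking, audio, link overhead)."""
    return width * height * fps * bits_per_pixel / 1e9


FPS = 60
RGB_8BIT = 24      # 8 bits each for R, G, B (4:4:4)
YUV420_8BIT = 12   # 8-bit 4:2:0: full-res luma plus two quarter-res chroma planes

hd = raw_gbps(1920, 1080, FPS, RGB_8BIT)
uhd_8k = raw_gbps(7680, 4320, FPS, RGB_8BIT)
hundred_views = 100 * hd
reduced = 100 * raw_gbps(1920, 1080, 30, YUV420_8BIT)  # half frame rate + chroma subsampling

print(f"1080p60:             {hd:6.1f} Gbit/s")
print(f"8K60:                {uhd_8k:6.1f} Gbit/s  ({uhd_8k / hd:.0f}x 1080p)")
print(f"100 x 1080p60:       {hundred_views:6.1f} Gbit/s  ({hundred_views / uhd_8k:.2f}x 8K)")
print(f"100 x 1080p30 4:2:0: {reduced:6.1f} Gbit/s")
```

With these assumptions, 8K60 works out to 16 times the 1080p60 rate and 100 × 1080p60 to 6.25 times 8K60; halving the frame rate and moving to 4:2:0 subsampling cuts the 100-view total to a quarter.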

Then, of course, there’s the business of creating all the content for these applications in 3D space, and of having machines powerful enough to process and output all of those views in parallel. The parallel reality pixel is just the beginning of the innovation needed. Misapplied Sciences already has this content workflow figured out for its discrete messaging applications and offers it as a service as part of its solution.

Full-frame-rate video and XR applications, on the other hand, seem likely to present quite a different challenge. In any event, watch this space.