AI: From Note-Taker to Changemaker

The topic of artificial intelligence (AI) is obviously one of the most popular subjects in today’s technology landscape. In the AV industry, the term is used to describe anything from a virtual assistant to camera tracking to the personalization of system settings.

As a member of my own company’s recently established AI Center of Excellence, I have been doing my best not only to stay on top of current market trends and product offerings, but also to gain a deeper understanding of how AI works and of the several different types of AI being explored across the wider ecosystem.

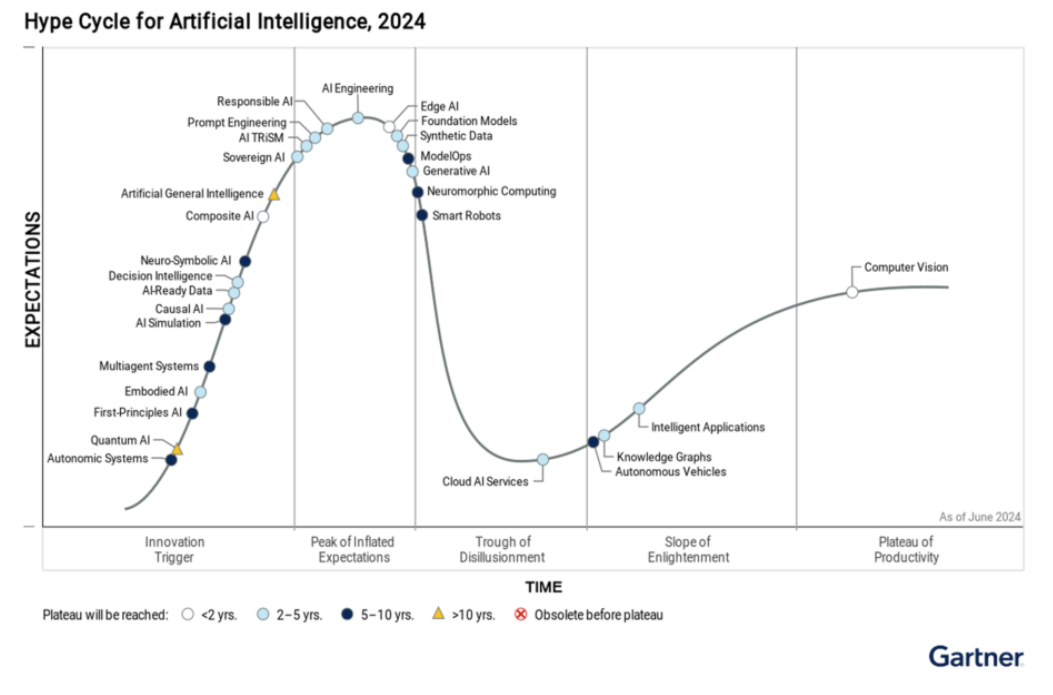

Just looking at something like the Gartner Hype Cycle for AI in 2024 gives you an idea of the scale of major AI initiatives and may introduce you to some new terminology.

This week, I attended a Qualcomm webinar titled “Integrating the Senses: How AI is Learning to See, Hear, and Interact.” In it, Roland Memisevic, senior director of engineering for Qualcomm Canada, discussed the company’s work in AI, including a specific model built around the idea of using AI as a real-time digital personal trainer for people who are exercising.

AI Architecture

Memisevic opened the webinar with a primer on how AI architecture has evolved from pipelines of separate component models to neural networks, in which those components are combined into groups and trained on data. Rather than working through each individual step explicitly, the trained network uses what it has learned to make predictions and decisions directly.

If you’re unfamiliar with AI architecture, the first 15 minutes of the webinar are immensely valuable for gaining at least some understanding of the complexity involved. They also open up a hundred rabbit holes to dive headfirst into, which I personally flagged for further research.

The Streaming Challenge

One of Memisevic’s key points was that streaming data creates an additional set of challenges, especially when that data comes from multiple sensors simultaneously (camera, microphone, etc.). In fact, most AI models can’t analyze streaming data directly. Instead, they run a kind of snapshot-to-text-to-token-to-action process, which works but has limitations.
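To make the snapshot-versus-streaming distinction concrete, here is a minimal Python sketch. It assumes a hypothetical pose tracker has already reduced each video frame to a single number, the person’s normalized hip height (1.0 = standing); the function names and thresholds are illustrative only and are not anything Qualcomm described. A snapshot answer can only describe one frame, while a streaming loop keeps a rolling window of recent frames and can therefore report motion.

```python
from collections import deque
from typing import Deque, Iterable, Iterator


def snapshot_answer(hip_height: float) -> str:
    # Snapshot style: one frame in, one answer out. A single frame can
    # only say where the body is right now, not where it is going.
    return "low" if hip_height < 0.5 else "high"


def streaming_answers(hip_heights: Iterable[float], window: int = 3) -> Iterator[str]:
    # Streaming style: consume frames as they arrive and keep a rolling
    # window of recent ones, so motion (which no snapshot contains) is visible.
    recent: Deque[float] = deque(maxlen=window)
    for h in hip_heights:
        recent.append(h)
        if len(recent) < window:
            continue  # not enough history yet to judge motion
        delta = recent[-1] - recent[0]  # hypothetical 0.05 dead band below
        if delta < -0.05:
            yield "descending"
        elif delta > 0.05:
            yield "rising"
        else:
            yield "holding"


# Hypothetical hip heights over eight frames of one squat.
print(snapshot_answer(0.7))  # → high
print(list(streaming_answers([1.0, 0.9, 0.8, 0.7, 0.7, 0.7, 0.8, 0.9])))
# → ['descending', 'descending', 'descending', 'holding', 'rising', 'rising']
```

The snapshot function has no way to tell a squat on the way down from one on the way up; that information only exists across frames.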

The stated goals of the model Qualcomm developed around fitness training were:

Situated vision-language models

- Process a live video stream in real time and dynamically engage with users

- Determine what to say and when to say it

- Enable the path to humanoids
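The second goal, deciding what to say and when to say it, can be illustrated with a deliberately simple rule-based sketch. Qualcomm did not describe its mechanism, and a learned model would not necessarily work this way; the `FeedbackTimer` class and its `persist_frames` and `cooldown_frames` parameters are hypothetical. The rule only speaks up about an issue that has persisted for several consecutive frames, then stays quiet for a cooldown period so the coaching doesn’t become a constant stream of chatter.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class FeedbackTimer:
    # Hypothetical tuning knobs, counted in video frames.
    persist_frames: int = 3    # an issue must persist this long before we mention it
    cooldown_frames: int = 10  # after speaking, stay quiet this long
    _streaks: Dict[str, int] = field(default_factory=dict, init=False)
    _quiet_until: int = field(default=0, init=False)

    def step(self, frame_idx: int, issues: List[str]) -> Optional[str]:
        """Return a cue to speak on this frame, or None to stay silent."""
        # Issues seen this frame extend their streak; anything not seen resets.
        self._streaks = {i: self._streaks.get(i, 0) + 1 for i in issues}
        if frame_idx < self._quiet_until:
            return None  # deciding *when*: still in cooldown, so say nothing
        # Deciding *what*: the first persistent issue (alphabetical, for determinism).
        for issue, count in sorted(self._streaks.items()):
            if count >= self.persist_frames:
                self._quiet_until = frame_idx + self.cooldown_frames
                self._streaks[issue] = 0
                return issue
        return None


timer = FeedbackTimer()
for i in range(15):
    cue = timer.step(i, ["knees splaying out"])
    if cue:
        print(i, cue)  # speaks at frames 2 and 12
```

A persistent issue is raised once it has lasted three frames and again only after the cooldown expires, while an issue that flickers on and off never builds a long enough streak to be mentioned.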

The challenges were called out as well:

Open-ended, asynchronous interaction with situated agents is a challenge.

- Limited to turn-based interactions about offline documents or images

- Limited to capturing momentary snapshots of reality in a VQA-style (Visual Question Answering) dialogue.

What Does This All Mean?

Qualcomm went on to describe the fitness training model it is developing. Although the application doesn’t map directly to our industry, I think it illustrates many of the challenges in a way that is easier to digest.

Imagine setting up your phone on a tripod, turning on the camera, and launching a fitness app.

The application creates a custom workout for you and then asks you to begin.

Let’s say the first exercise is squats and you start to do the first few reps.

The camera streams visual data to the AI model, which compares that data to a reference model of what an ideal squat movement should look like. It may be tracking multiple things at once. Are the knees parallel? How close are the glutes getting to the heels at the depth of each rep? Are the knees staying over the toes? Is the weight in the heels? Is the back in the correct position?
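Two of those questions, squat depth and back position, can be sketched as simple geometry on body keypoints. This is a minimal illustration under several assumptions: a side-on camera view, 2D keypoints already produced by some pose estimator (not shown), and invented threshold values in `REFERENCE` that stand in for a reference model. They are placeholders, not coaching guidance and not Qualcomm’s method.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) in normalized image coordinates, y grows downward

# Hypothetical thresholds standing in for the "reference model" of an ideal squat.
REFERENCE = {"max_knee_angle_at_bottom": 100.0, "max_torso_lean": 45.0}


def angle_at(a: Point, b: Point, c: Point) -> float:
    """Angle ABC in degrees; hip-knee-ankle gives the knee angle."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (math.hypot(*v1) * math.hypot(*v2))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))  # clamp for float error


def torso_lean(shoulder: Point, hip: Point) -> float:
    """Degrees the hip-to-shoulder line tilts away from vertical."""
    dx, dy = shoulder[0] - hip[0], shoulder[1] - hip[1]
    return math.degrees(math.atan2(abs(dx), abs(dy)))


def check_squat_bottom(kp: Dict[str, Point]) -> List[str]:
    """Compare keypoints at the bottom of a rep against the reference."""
    issues = []
    if angle_at(kp["hip"], kp["knee"], kp["ankle"]) > REFERENCE["max_knee_angle_at_bottom"]:
        issues.append("go deeper")
    if torso_lean(kp["shoulder"], kp["hip"]) > REFERENCE["max_torso_lean"]:
        issues.append("keep your chest up")
    return issues


good = {"shoulder": (0.55, 0.3), "hip": (0.5, 0.6), "knee": (0.6, 0.6), "ankle": (0.6, 0.8)}
needs_work = {"shoulder": (0.8, 0.35), "hip": (0.5, 0.45), "knee": (0.6, 0.6), "ankle": (0.6, 0.8)}
print(check_squat_bottom(good))        # → []
print(check_squat_bottom(needs_work))  # → ['go deeper', 'keep your chest up']
```

A single flat camera makes this harder than it looks: the same angle computed from a front view, or from a person of different proportions, would need different handling, which is part of what makes the phone-camera setup challenging.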

It also needs to understand all of this in the context of time, so it analyzes temporal data as well to see whether you’re speeding up, slowing down, laboring at the bottom, jerking too much, etc.

The model also uses temporal data to simplify much of the data collection: it only needs to track pixel changes relative to a base image rather than every pixel in every frame, but it still has to do that in real time.
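The general idea of tracking only what changed can be illustrated with classic frame differencing. This is a minimal sketch assuming small grayscale frames stored as nested lists; the 25-level `threshold` is an arbitrary illustrative value, and Qualcomm did not say its model uses this exact technique.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale frame, pixel values 0-255


def changed_pixels(base: Frame, frame: Frame, threshold: int = 25) -> List[Tuple[int, int]]:
    """(row, col) of every pixel whose brightness differs from the base by more than threshold."""
    return [
        (r, c)
        for r, (base_row, row) in enumerate(zip(base, frame))
        for c, (b, p) in enumerate(zip(base_row, row))
        if abs(p - b) > threshold
    ]


def fraction_skipped(base: Frame, frame: Frame, threshold: int = 25) -> float:
    """Share of pixels that downstream analysis can skip because nothing changed."""
    total = sum(len(row) for row in frame)
    return 1.0 - len(changed_pixels(base, frame, threshold)) / total


base = [[0] * 4 for _ in range(4)]
frame = [row[:] for row in base]
frame[1][1] = frame[1][2] = 200  # something moved in two pixels
print(changed_pixels(base, frame))    # → [(1, 1), (1, 2)]
print(fraction_skipped(base, frame))  # → 0.875
```

Even here the differencing pass itself still touches every pixel; the savings come from handing only the changed regions to the expensive analysis, and the base image would need refreshing as lighting or the background shifts.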

Because the AI model needs to give accurate advice and encouragement, it can’t use predictive reasoning to look forward in time, so the temporal data can only be used to compare past to present.
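Comparing only past to present can be sketched with rep tempo. In this minimal example, assuming rep durations (in seconds) come from some hypothetical upstream rep counter, each decision uses only reps that have already finished, so nothing depends on future frames. The `ratio` of 1.3 is an arbitrary illustrative threshold.

```python
from typing import List, Optional


def tempo_feedback(durations: List[float], ratio: float = 1.3) -> Optional[str]:
    """Compare the latest completed rep with the earlier ones (past data only)."""
    if len(durations) < 3:
        return None  # too little history to establish a baseline
    *earlier, latest = durations
    baseline = sum(earlier) / len(earlier)
    if latest > ratio * baseline:
        return "slowing down"
    if latest < baseline / ratio:
        return "speeding up"
    return None


def run_session(rep_durations: List[float]) -> List[Optional[str]]:
    history: List[float] = []
    feedback: List[Optional[str]] = []
    for d in rep_durations:
        history.append(d)  # a rep's duration is only known once it has finished
        feedback.append(tempo_feedback(history))  # never looks at future reps
    return feedback


print(run_session([2.0, 2.0, 2.1, 2.0, 3.0]))
# → [None, None, None, None, 'slowing down']
```

Because the baseline is built only from completed reps, the slowdown can be flagged as soon as the fifth rep ends, but never before it happens.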

The model then has to switch back and forth between cross-attention (watching the person exercise) and self-attention (interpreting that visual data to understand what to do). Based on that, it has to decide what advice to give and exactly when to give it. Perhaps it coaches the person not to splay their knees out when lowering into the squat, or it offers encouragement as reps slow or when the person struggles partway through an exercise.
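In the standard Transformer formulation, self-attention lets the tokens of one sequence attend to each other, while cross-attention lets tokens from one sequence (for example, language tokens) attend to another (for example, visual features from video frames). The pure-Python sketch below shows both using the same scaled dot-product attention function. It is a generic textbook illustration with toy vectors, not Qualcomm’s architecture; real vision-language models also apply learned query, key, and value projections, use multiple heads, and stack many layers, all omitted here.

```python
import math
from typing import List

Vec = List[float]


def softmax(xs: Vec) -> Vec:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: List[Vec], keys: List[Vec], values: List[Vec]) -> List[Vec]:
    """Scaled dot-product attention: each query takes a mix of the values,
    weighted by how similar the query is to each key."""
    scale = math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out


def self_attention(tokens: List[Vec]) -> List[Vec]:
    # Queries, keys, and values all come from the same sequence.
    return attention(tokens, tokens, tokens)


def cross_attention(queries: List[Vec], context: List[Vec]) -> List[Vec]:
    # Queries from one sequence attend to keys/values from another,
    # e.g. language tokens attending to per-frame visual features.
    return attention(queries, context, context)


# Toy values: two "visual" feature vectors and one "language" query.
visual = [[10.0, 0.0], [0.0, 10.0]]
query = [[1.0, 0.0]]
print(cross_attention(query, visual))  # mostly picks up the first visual feature
print(self_attention(visual))
```

The only difference between the two calls is where the keys and values come from, which is why a model can alternate between them layer by layer.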

And if all this sounds like a lot, add auditory data about the sounds being made (breathing, etc.) and sensor data that tracks heart rate, body temperature, and so on, and you have far more complexity to solve for.

Then, of course, Qualcomm is using an ordinary phone camera, not lidar, IR, or the kind of spatial recognition camera that gesture-tracking systems use, and the model has to adjust for a multitude of body shapes and sizes, with different proportions, across a whole library of exercises.

Implications for Us

Qualcomm’s webinar was specifically about its model for an AI fitness trainer application, but with AI being “an era and not a product” (a nod to my colleague Julian Phillips for that phrase), this type of research has immediate implications for AV.

The first, obviously, is natural gesture-based control of AV systems without additional cameras or hardware. Most of our meeting spaces already have a camera for teleconferencing, so using that camera as an edge device that also runs a control application for gesture control seems fairly intuitive.

The second implication is in managed services and support, where your virtual assistant can actually see the actions you are taking and recommend troubleshooting steps. Because it can use visual data from the room, it could see whether displays are on and lights are working, analyze which buttons you are pressing, and make timely recommendations.

On a wider scale, adding this type of AI training agent to any training environment or learning management system could open up a wide range of training solutions for employees who perform physical tasks that require specific skill sets. It would also require creating reference data to serve as a source of truth in the AI model’s visual library for comparison.

Of course there are likely countless other implications here as well.

From Observer to Facilitator and Trainer

My main takeaways were twofold. First, despite the advances we have made in computer vision and image analysis, we still face challenges in analyzing streaming media in real time. Second, I see AI continuing to advance from its current, largely administrative role in our lives and meetings toward a potentially more active role as counselor and facilitator.

In fact, I have the inside track on some meeting and project management AI tools that are doing just that, but I’ll have to save that topic for my next blog post…